You know that moment when you’re running a perfectly functional self-hosted stack, and you think “This could be better”? Yeah, that was me a few weeks ago. What started as a simple database migration turned into a full-blown DevOps adventure complete with firewall lockouts, authentication failures, and AWS deciding to have a bad day.

Here’s the real story of how I went from manual deployments to a proper CI/CD pipeline – including all the stuff that broke along the way.

The Starting Point: Too Much Self-Hosting

I had this setup running on an EC2 instance – n8n for automation workflows, a containerized Qdrant vector database, the whole nine yards. It worked, but maintaining that Qdrant container was becoming a pain. Every time I needed to make changes, it was SSH into the server, edit docker-compose files by hand, pray nothing broke.

Not exactly the kind of workflow you want to show enterprise clients.

Phase 1: Ditching Qdrant for Supabase

First order of business was getting rid of the self-hosted Qdrant database. Don’t get me wrong – Qdrant is solid – but I wanted something managed, something with the power and familiarity of PostgreSQL.

Enter Supabase with pgvector. Same vector search capabilities, but now with all the PostgreSQL features I actually know how to optimize.

The migration was pretty straightforward:

- Nuked the Qdrant service from docker-compose.yml

- Spun up a new Supabase project

- Enabled the pgvector extension

- Created the documents table with the same structure I had before

- Built a custom search function for filtered similarity searches

The real win here wasn’t just the managed service – it was having a proper SQL interface for debugging. Way easier to troubleshoot vector searches when you can write actual SQL queries.

Phase 2: GitHub Actions – Because Manual Deployments Suck

With the database sorted, I decided to tackle the deployment workflow. I was tired of SSHing into servers and manually running docker compose up -d like some kind of caveman.

Time to set up proper CI/CD with GitHub Actions.

Setting Up SSH Access (The Right Way)

Generated a dedicated SSH key pair specifically for GitHub Actions. Added the public key to the n8nuser account on the server – not ubuntu, because that user actually owns the application files. Security 101: least privilege principle.

Stored all the connection details as GitHub secrets:

EC2_HOST: Server IPEC2_USERNAME:n8nuserWORK_DIR:/mnt/appdata/app_configsEC2_SSH_PRIVATE_KEY: The full private key

The Workflow

Created a simple but effective GitHub Actions workflow. Any push to main triggers:

- Checkout the code

- Copy files to the server via SSH

- Run

docker compose up -d

Clean, simple, automated.

Phase 3: When Everything Goes Wrong

Of course, nothing ever works on the first try. Here’s where things got interesting.

Problem #1: The Great Firewall Lockout

First run of the GitHub Action? Complete failure. Connection timeout on port 22.

Turns out my Terraform-managed Security Group was only allowing SSH from my personal IP. GitHub Actions servers? Yeah, they come from random IPs all over the place.

Solution: Updated main.tf to add a second SSH ingress rule allowing 0.0.0.0/0. Not ideal from a security standpoint, but necessary for automation. Sometimes you have to make trade-offs.

Problem #2: The Authentication Dance

Fixed the firewall, ran it again. Now I’m getting ssh: unable to authenticate.

Rookie mistake: I had EC2_USERNAME set to ubuntu in GitHub secrets, but the SSH key was in n8nuser‘s home directory.

Solution: Updated the GitHub secret to match reality. Always double-check your assumptions.

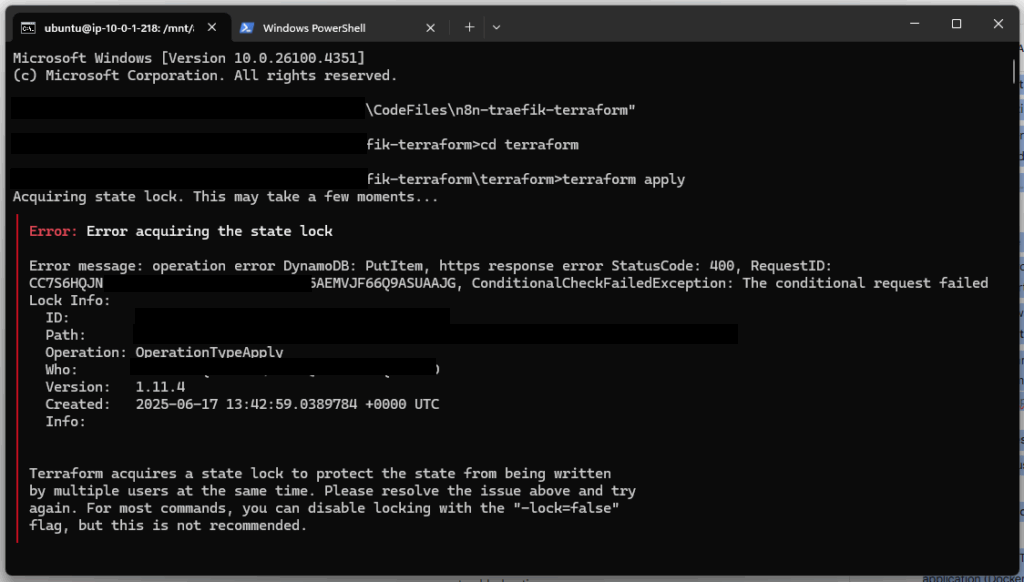

Problem #3: AWS Has a Bad Day

While I’m troubleshooting the firewall issue, the entire server just… disappears. Can’t SSH, can’t hit the n8n domain, nothing. AWS console shows “Instance reachability check failed.”

For a hot minute, I thought I’d completely broken something with my Terraform changes. Started questioning every decision I’d made in the past week.

Plot twist: This was completely unrelated to anything I was doing. The underlying AWS hardware had failed. Sometimes the cloud provider is just having issues, and there’s nothing you can do about it.

Solution: Rebooted the instance from the AWS console, which forced a migration to healthy hardware. Everything came back online immediately. The restart: unless-stopped policy in docker-compose meant all services started automatically.

The Lessons (Because There Are Always Lessons)

Git as Single Source of Truth

Having your infrastructure config in Git prevents that dreaded “configuration drift” where your live system stops matching what you think it should be. Plus, you get an audit trail of every change.

Isolate Your Problems

When something breaks, figure out if it’s your code, your automation, your infrastructure, or the cloud provider. Don’t assume it’s always your fault (but start there).

Least Privilege Actually Matters

Using n8nuser instead of ubuntu for automation isn’t just security theater – it prevents a whole class of permission issues down the road.

Cloud Infrastructure Can Fail

Even AWS has bad days. Know how to use your provider’s health checks and diagnostic tools. Sometimes the problem really isn’t you.

Idempotency is Your Friend

Both Terraform and Docker Compose are designed to be run repeatedly without breaking things. This makes automation safe and reliable – you can always re-run your deployment.

The End Result

Now I’ve got a clean, automated pipeline. Push to main, changes get deployed automatically. The database is managed by people who know more about running databases than I do. And when something does break, I’ve got proper logging and monitoring to figure out what went wrong.

Is it perfect? No. But it’s professional, reliable, and I can actually show it to enterprise clients without being embarrassed.

Plus, I learned a ton about Terraform, GitHub Actions, and why you should never trust that everything will work on the first try.

Building reliable DevOps pipelines is 20% knowing the tools and 80% knowing how to troubleshoot when they inevitably break. Plan for failure, because it’s coming.