You know that moment when you’re staring at three different cloud platforms, each promising to be the future of AI development, and you start second-guessing everything you thought you knew? Yeah, that was me a few weeks ago. I found myself caught in the classic developer trap: shiny new tool syndrome.

Azure AI Foundry had just hit general availability with its slick interface that made everything look so easy. Google’s Agent Development Kit was tempting me with its MCP (Model Context Protocol) capabilities. And there I was, an AWS-certified professional, wondering if the grass was greener on the other side.

Here’s how I found my way back to what I should have trusted all along—and discovered that AWS was way ahead of me.

The Great Cloud Platform Evaluation

Let me paint the picture of my platform paralysis. Azure AI Foundry looked incredible in the demos. That polished UI made me think, “Maybe this is where the future of AI development is heading.” The drag-and-drop simplicity was seductive, and I’ll admit it—I got caught up in the presentation.

I spent significant time testing Azure’s offering and wrote about my full experience here. Spoiler alert: it wasn’t quite ready for prime time. The streaming/tracing limitations alone were deal-breakers for production applications.

Google’s ADK had me curious too, especially with its MCP capabilities. The idea of a standardized protocol for connecting models to external tools and data was genuinely interesting from an integration standpoint.

But then I had a moment of clarity while taking some time to reflect on a small project I was managing. I realized I was chasing features instead of focusing on fundamentals. I had learned AWS for a reason. They’re the largest cloud provider, their AI services are mature and battle-tested, and, most importantly, people will want AI agents on AWS regardless of what small advantages other platforms might have.

That’s when it hit me: tracing is really just logs with different properties and guidelines. The fancy interfaces are nice, but they don’t fundamentally change what you can build. AWS might not have the shiniest UI for every new AI feature, but they have the infrastructure, the ecosystem, and the enterprise trust that actually matter.
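To make the "tracing is just logs" point concrete, here is a minimal sketch of what I mean. It is not tied to any particular tracing product; the `span_record` helper and the field names are my own illustrative assumptions, loosely modeled on the fields most tracing systems share (a trace ID, a span ID, and a parent span ID).

```python
import json
import time
import uuid


def span_record(name, trace_id, parent_span_id=None, **attributes):
    """Build a plain log record that also carries the fields of a trace span.

    Hypothetical helper for illustration only, not a real tracing API.
    """
    return {
        "trace_id": trace_id,              # shared by every record in one request
        "span_id": uuid.uuid4().hex[:16],  # unique per unit of work
        "parent_span_id": parent_span_id,  # links a child step to its caller
        "name": name,
        "start_time": time.time(),
        "attributes": attributes,
    }


# One request produces two related records: a root span and a child span.
trace_id = uuid.uuid4().hex
root = span_record("handle_request", trace_id, user="demo")
child = span_record(
    "invoke_agent", trace_id, parent_span_id=root["span_id"], model="example-model"
)

# Emitting them is ordinary structured logging: one JSON object per line.
for record in (root, child):
    print(json.dumps(record))
```

Strip out `trace_id`, `span_id`, and `parent_span_id` and you are left with a normal structured log line. The extra properties are what let a tool stitch the lines back into a tree.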

The AWS Discovery That Changed Everything

So I committed. I decided to stop platform-hopping and go deep on AWS. And honestly? I can’t believe I doubted them.

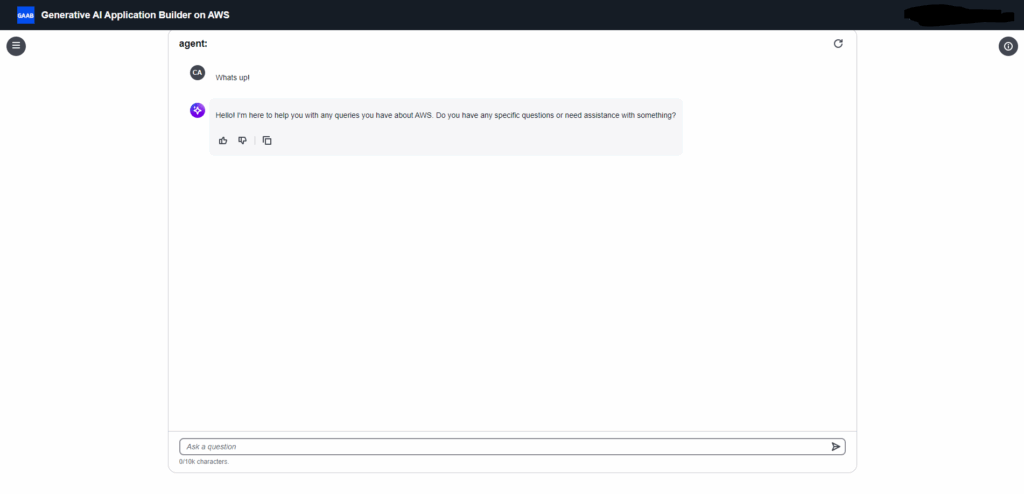

Within days of making this decision, I discovered something that completely changed my perspective: Generative AI Application Builder on AWS. This isn’t some experimental beta feature. It’s a comprehensive solution that facilitates the development, rapid experimentation, and deployment of generative AI applications without requiring deep AI experience.

The solution accelerates development by helping you:

- Ingest your business-specific data and documents

- Evaluate and compare LLM performance

- Run multi-step tasks and workflows with AI agents

- Rapidly build extensible applications with enterprise-grade architecture

What really impressed me was the integration depth. It works seamlessly with Amazon Bedrock LLMs, SageMaker AI deployments, Bedrock Knowledge Bases for RAG, Bedrock Guardrails for safety, and Bedrock Agents for agentic workflows. Plus, it supports custom model connections through LangChain connectors in Lambda functions.

This wasn’t just a nice UI slapped on top of existing services—this was a thoughtfully designed platform for building production AI applications.

Hands-On Implementation Journey

I decided to dive in with the CloudFormation template deployment. The beauty of this approach is that it sets up the entire infrastructure stack with proper security, VPC configuration, and all the necessary components for building secure AI agents.

Once deployed, I had access to a pre-built UI for testing, but that’s where things got really interesting. I took it a step further and created a Lambda function with the necessary security policies to invoke my agent programmatically.

The setup allows me to send a message from n8n, make an HTTP request to the Lambda function, wait for the agent’s response from the streaming endpoint, and return the result. It sounds simple, but the implications are huge.
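For anyone who wants to picture that Lambda, here is a rough sketch of the shape it can take. It assumes the agent is a Bedrock agent called through the `invoke_agent` operation of the `bedrock-agent-runtime` client, which may not match every deployment. The `build_handler` and `collect_completion` names, the `message` and `sessionId` request fields, and the environment variable names in the comment are all hypothetical choices for illustration.

```python
import json
import uuid


def collect_completion(event_stream):
    """Join the text chunks of an InvokeAgent-style event stream into one string."""
    parts = []
    for event in event_stream:
        chunk = event.get("chunk")
        # Non-chunk events (for example trace events) are skipped.
        if chunk and "bytes" in chunk:
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts)


def build_handler(client, agent_id, agent_alias_id):
    """Return a Lambda-style handler bound to an agent runtime client."""

    def handler(event, context):
        # The HTTP caller (n8n in my case) sends a JSON body with a "message".
        body = json.loads(event.get("body") or "{}")
        message = body.get("message", "")
        if not message:
            return {
                "statusCode": 400,
                "body": json.dumps({"error": "message is required"}),
            }

        # Reuse the caller's session to keep conversation context, else start one.
        session_id = body.get("sessionId") or str(uuid.uuid4())
        response = client.invoke_agent(
            agentId=agent_id,
            agentAliasId=agent_alias_id,
            sessionId=session_id,
            inputText=message,
        )

        # Wait for the whole streamed answer, then return it in one response.
        reply = collect_completion(response["completion"])
        return {
            "statusCode": 200,
            "body": json.dumps({"sessionId": session_id, "reply": reply}),
        }

    return handler


# In a real Lambda module you would wire it up roughly like this
# (AGENT_ID / AGENT_ALIAS_ID are hypothetical environment variable names):
#
#   import os, boto3
#   handler = build_handler(
#       boto3.client("bedrock-agent-runtime"),
#       os.environ["AGENT_ID"],
#       os.environ["AGENT_ALIAS_ID"],
#   )
```

Passing the client in, rather than creating it inside the handler, keeps the function easy to test locally with a stand-in client before any IAM policies or real agents are involved.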

This integration opens up so many possibilities. I can now trigger AI agents from any workflow, integrate them into client systems, and build conversational interfaces that tap into the full power of AWS’s AI ecosystem. The streaming endpoint means real-time responses, and the security model ensures everything stays locked down properly.

Real-World Validation: The Taco Bell Case Study

Here’s where timing got really interesting. Two days after my AWS agent deployment testing, I came across an article about how Taco Bell used AI to boost sales by 8%. They needed to improve shift scheduling and inventory management without reducing staff or compromising customer service.

Their solution? An AI-powered coach that helps managers with staff scheduling, optimizing store hours, and flagging inventory requirements. They also integrated AI voice technology into 500 drive-through lanes to streamline order-taking and support staff. The results speak for themselves: 8% sales increase and managers who could respond better to variable factors like employee absences or local competitor hours.

But here’s what really caught my attention—how does a national chain like Taco Bell offer such sophisticated agentic features across thousands of locations? The cloud. And when I looked up their cloud provider, the answer was right there in the search results: they’re an AWS customer, working with AWS partner Lumigo for their observability needs.

This wasn’t just validation of AI agents as a concept—it was validation that AWS is where enterprise-scale AI deployment happens. Taco Bell achieved over 80% reduction in Mean Time To Resolve issues using AWS infrastructure for their tech-forward approach to managing 7,000+ restaurants.

When I see real companies getting real results with AI agents on AWS, it reinforces that I’m building skills in the right direction.

Why This Is “My Sauce”

After all this exploration and hands-on work, I’m convinced that AI agents on AWS is where I want to focus my expertise. Here’s why:

Market Reality: AWS is the largest cloud provider with the most mature ecosystem. Enterprise customers trust AWS infrastructure, and that trust extends to AI workloads.

Practical Advantages: While Azure might have a prettier interface for some features, AWS has the depth of services, the integration possibilities, and the proven scalability that real applications need.

Business Opportunity: Companies will want AI agents on AWS the same way they’ll want them on Azure. The platform choice often comes down to existing infrastructure and comfort level, not which one has the shiniest demo.

Personal Growth: I learned AWS for a reason. Instead of chasing every new platform, doubling down on AWS expertise means I can build deeper, more valuable skills.

I have a lot of runway with this, and I don’t exactly know what I’m going to do with it yet. But I know this is my sauce: this is what I want to get good at. The Generative AI Application Builder gives me a solid foundation to build on all the agent work I’ve done for small businesses and see how deploying large-scale agents could be my next specialty.

What’s Next

This is just the beginning. I’ll have plenty more blog posts on AWS AI agents moving forward as I explore what’s possible with this platform. The combination of Bedrock’s AI services, Lambda’s compute flexibility, and the Application Builder’s infrastructure makes for a powerful development environment.

The real test will be building something meaningful with these tools—something that solves actual business problems the way Taco Bell solved theirs. That’s where the rubber meets the road, and that’s what I’m excited to explore.

Have you been wrestling with similar platform decisions for your AI projects? I’d love to hear about your experiences and what factors ultimately drove your choice. The AI landscape is moving fast, but sometimes the best strategy is picking a solid foundation and going deep rather than chasing every shiny new option.

What challenges are you facing with AI agent development, and how are you thinking about platform selection? Drop a comment and let’s figure this out together.

Building AI solutions that work in production means making smart choices about platforms and tools. Sometimes the familiar choice is the right choice—especially when it comes with enterprise-grade infrastructure and a proven track record.