You know that sinking feeling when a customer calls to tell you they can’t access something, and you realize you had no idea it was broken? That was me last week when AWS decided to have hardware issues with my EC2 instance.

A simple phone call about a broken form turned into the wake-up call I needed about monitoring. Here’s how my attempt to “just set up some basic monitoring” became an unexpected journey through Docker permissions, Snap package gotchas, and why sometimes the simple solution is the one that actually works.

The Phone Call That Changed Everything

Picture this: I’m having a perfectly normal Tuesday when my phone rings. Client on the other end, politely asking why their form isn’t working. My first thought was “probably just a browser issue” until I tried to access the service myself.

Nothing. Complete silence from the server.

Turns out AWS had some underlying hardware issues that required a reboot, and I had zero visibility into it. No alerts, no notifications, just me looking completely unprofessional because I found out from a customer that my infrastructure was down.

That’s the moment you realize monitoring isn’t optional—it’s the difference between being proactive and being embarrassed.

The “This Should Be Simple” Trap

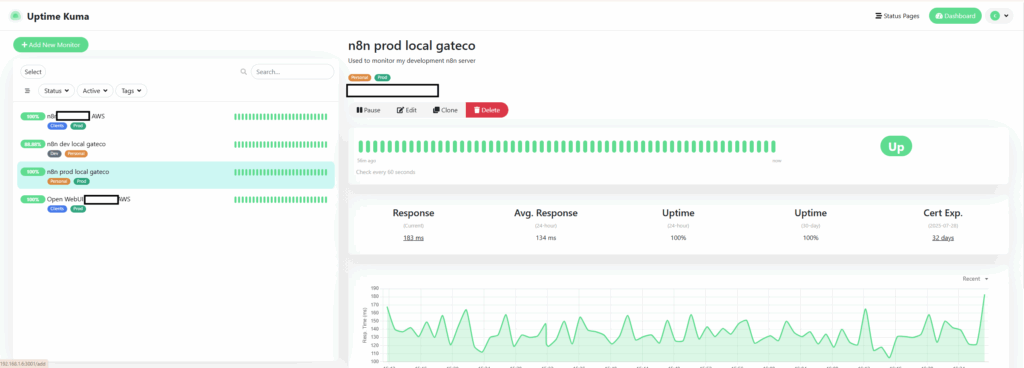

I’d heard good things about Uptime Kuma. Self-hosted, clean interface, designed for exactly this kind of problem. My plan was straightforward: deploy it locally for testing, then use it to monitor the actual client services running on AWS.

The Docker Compose setup looked clean enough. Container deployed successfully, everything seemed fine. Then I tried to access the web interface and hit my first reality check—the host firewall was blocking the port. Classic mistake that somehow always catches me off guard.

Got that sorted and felt pretty good about myself. Until I tried to actually monitor something.

Down the Docker Socket Rabbit Hole

This is where things got educational. I wanted to test monitoring a local container first before pointing it at production services. Selected “Docker Container” as the monitor type and immediately got hit with “No Docker Host Available.”

The Docker socket was mounted correctly in the compose file, so what was the problem?

Turns out there’s this whole permission dance between the container user, the host’s docker group, and the socket file ownership. I spent way too much time trying different user configurations, group permissions, security options—basically fighting the system at every turn.

Then I discovered the real plot twist: Docker was installed as a Snap package. And Snap packages live in their own heavily sandboxed world that doesn’t play nice with traditional permission models.

No matter what I tried, the Snap sandbox was preventing reliable communication between containers. It was like trying to have a conversation through three layers of bulletproof glass.

The Strategic Pivot

Here’s where I made the smart decision instead of the stubborn one. Rather than spend hours trying to break through Snap’s security model, I stepped back and thought about what I actually needed.

Monitoring containers via their network endpoints works exactly the same as monitoring remote services. Find the container’s internal IP, point an HTTP monitor at it, done. Simple, reliable, doesn’t require fighting system security policies.

Sometimes the obvious solution is the right solution.

The Real Challenge: Cloudflare WAF

Now for the important part—monitoring the actual client services running on AWS behind Cloudflare’s WAF protection. Great for security, potential nightmare for automated monitoring tools.

Set up a basic monitor pointing at the public domain. It worked initially, but I knew this was a ticking time bomb. Cloudflare’s bot protection is specifically designed to challenge repeated automated requests from single IP addresses—which perfectly describes a monitoring tool.

The solution involved creating a custom WAF rule that uses both IP address and a secret User-Agent header. Kind of like a two-factor authentication system for monitoring traffic. Secure enough to keep the bad guys out, specific enough to let monitoring through.

What Actually Works in Production

After all that learning the hard way, my final setup is refreshingly simple. Basic Uptime Kuma deployment without any of the Docker socket complexity. Local testing uses network-based monitoring. Production services get monitored through carefully configured Cloudflare exceptions.

The whole experience reminded me of something I keep learning over and over: the fancy solution isn’t always the right solution. Sometimes the tool that works reliably beats the tool that does everything perfectly in theory.

The Lessons Nobody Warns You About

Snap Packages Have Hidden Gotchas – That “easier” installation method can create harder operational challenges down the road. The sandboxing is more aggressive than you might expect.

Firewall Basics Still Matter – Docker port mapping gets your container talking to the host, but it doesn’t magically create firewall exceptions. I should know this by now, but it still trips me up.

Know When to Stop Fighting – I probably spent two hours trying to make Docker socket monitoring work when network monitoring was always the better approach. Sometimes the system is telling you something important.

Monitoring Security Is Real – WAF protection that stops monitoring tools is working as designed. The trick is creating controlled exceptions that don’t compromise the actual security.

This whole project started with embarrassment over missed downtime and ended up being a crash course in Linux permissions, container security, and network monitoring. Plus, now I can sleep better knowing I’ll get an alert before my customers do.

The Bigger Picture

This monitoring setup is just the foundation. The real value comes when you can respond to issues faster than your customers can notice them. Next step is connecting these alerts to automated responses—maybe auto-restart services, send targeted notifications, or escalate based on severity.

The infrastructure for reliable client services is never “done,” but at least now I have eyes on what’s happening when AWS decides to have another bad day.

Have you been caught off guard by infrastructure failures? I’d love to hear about your monitoring setups and whether you’ve run into similar permission issues with Docker. What’s your approach to monitoring services behind WAF protection?

And if anyone else has been surprised by Snap package limitations, I’d love to commiserate. Sometimes the “easier” path creates the most interesting challenges.

Building reliable infrastructure for client work means accepting that you’ll find out about problems in the most embarrassing ways possible—until you don’t. Sometimes the best monitoring setup is the one that actually works, not the one that looks the most impressive on paper.