Remember that campaign management system I built to save a client over $300/month by ditching Airtable’s expensive client portal? Well, that story just got a sequel – and like most sequels, it involved way more complexity than I originally anticipated.

What started as a simple request to “move it off Replit so we own it” turned into a crash course in production Docker deployments, build process gotchas, and why cookie security settings can make you question your life choices. Here’s how a straightforward migration became an education in what separates rapid prototyping from production-ready infrastructure.

The “We Want to Own It” Conversation

A few weeks after deploying the original Google Sheets + Replit solution, I got the call I should have expected. The client was happy with the functionality, but they wanted full ownership and control over their application. Fair enough – relying on a third-party platform like Replit for a core business system isn’t exactly enterprise-grade thinking.

The conversation went something like this:

Client: “Can we move this to our own server? We want to make sure we’re not dependent on anyone else’s platform.”

Me: “Absolutely. Should be straightforward – just containerize it and deploy to a cloud server.”

Also Me (internally): “How hard could it be? It’s just moving code from one place to another.”

Narrator: It was not straightforward.

The Technical Game Plan

I decided to go with a modern, containerized approach that would set them up for success long-term. The strategy was clean:

- Source Control: Move everything to GitHub for proper version control

- Containerization: Package the app with Docker for portability

- Image Registry: Use GitHub Container Registry (GHCR) to store built images

- Hosting: Deploy to a DigitalOcean droplet as a staging environment

- Orchestration: Use Docker Compose for easy deployment management

The end goal was to create a foundation that could eventually migrate to their primary AWS infrastructure alongside their other services.

Simple, right? Clean separation of concerns, industry-standard tools, battle-tested approach.

Reality Check #1: The Manifest Unknown Mystery

First deployment attempt: complete failure. The container never started because the server couldn’t pull the image. The error was cryptic but specific: “manifest unknown.”

I spent about 30 minutes convinced I had a Docker configuration problem before realizing the embarrassing truth – I’d built the image locally but never actually pushed it to the registry.

This is one of those rookie mistakes that feels obvious in retrospect but can drive you crazy in the moment. The image existed on my local machine, so all my local testing worked perfectly. But the remote server? It had no idea what I was talking about.

Lesson learned: Always verify your image is actually in the registry before trying to deploy it. The GitHub Packages UI is your friend – if you can’t see it there, neither can your server.
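If you’d rather have a deploy script answer that question than eyeball the UI, the registry can tell you directly. Here’s a minimal sketch, assuming a public image and Node 18+ (for the built-in `fetch`). It asks GHCR’s anonymous token endpoint for a pull token, then sends a `HEAD` request to the OCI distribution API’s `/v2/<name>/manifests/<tag>` endpoint. A 404 there is the same “manifest unknown” condition the server hit. The helper names and the `acme/campaign-manager` image are hypothetical, and a private (or never-pushed) repository may come back as an auth error, which this sketch throws rather than reporting `false`.

```javascript
// Split "ghcr.io/owner/image:tag" into repository path and tag.
// Defaults to "latest" when no tag is given; digest references
// (name@sha256:...) are not handled in this sketch.
function parseGhcrRef(ref) {
  const prefix = "ghcr.io/";
  if (!ref.startsWith(prefix)) {
    throw new Error(`not a GHCR reference: ${ref}`);
  }
  const rest = ref.slice(prefix.length);
  // The tag separator is the first ":" after the last "/"
  const lastSlash = rest.lastIndexOf("/");
  const colon = rest.indexOf(":", lastSlash + 1);
  return colon === -1
    ? { repository: rest, tag: "latest" }
    : { repository: rest.slice(0, colon), tag: rest.slice(colon + 1) };
}

// OCI distribution API endpoint for a single manifest.
function manifestUrl({ repository, tag }) {
  return `https://ghcr.io/v2/${repository}/manifests/${tag}`;
}

// Accept both OCI and Docker manifest/index types so multi-arch images resolve.
const MANIFEST_TYPES = [
  "application/vnd.oci.image.index.v1+json",
  "application/vnd.oci.image.manifest.v1+json",
  "application/vnd.docker.distribution.manifest.list.v2+json",
  "application/vnd.docker.distribution.manifest.v2+json",
].join(", ");

// Resolves to true if the tag exists, false on 404 ("manifest unknown").
// Public images only: uses an anonymous pull token.
async function imageExists(ref) {
  const parsed = parseGhcrRef(ref);
  const tokenRes = await fetch(
    `https://ghcr.io/token?scope=repository:${parsed.repository}:pull`
  );
  if (!tokenRes.ok) throw new Error(`token request failed: ${tokenRes.status}`);
  const { token } = await tokenRes.json();

  const res = await fetch(manifestUrl(parsed), {
    method: "HEAD",
    headers: { Authorization: `Bearer ${token}`, Accept: MANIFEST_TYPES },
  });
  if (res.status === 404) return false;
  if (!res.ok) throw new Error(`registry returned ${res.status}`);
  return true;
}
```

Calling `imageExists("ghcr.io/acme/campaign-manager:latest")` before `docker compose pull` turns a confusing remote failure into an explicit local one.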

Reality Check #2: The Great Vite Catastrophe

Once the image was actually in the registry, the deployment went through, and then… immediate crash. The application logs showed errors about missing Vite and various build plugins. This one had me scratching my head for a while.

Here’s what was happening: the production Docker build correctly skipped installing development dependencies (devDependencies in package.json) to keep the final image lean. But somehow, the built code was still trying to import these development-only packages at runtime.

The root cause was subtle but important – the server code was importing build tools unconditionally, even in production. When Docker built the production image, it skipped installing these tools (correctly), but the code still tried to use them (incorrectly).

The fix required updating both the build process and the server code:

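// Before (broken in production): a static import means Node must resolve
// 'vite' as soon as the server starts, even though it's a devDependency
import { defineConfig } from 'vite'

// After (environment-aware): only load Vite outside production.
// Top-level await requires the server to run as an ES module.
if (process.env.NODE_ENV !== 'production') {
  const { defineConfig } = await import('vite')
  // ... development-only code
}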

I also updated the build script to explicitly mark development packages as “external,” so the bundler would leave them out of the final bundle instead of trying to inline them.
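The post doesn’t show the build script, so here’s a hedged sketch of one way to do this with esbuild’s JavaScript API. That’s an assumption: the real script might use esbuild’s CLI flags (`--external:<pkg>` or `--packages=external`) or a different bundler entirely. Deriving the `external` list from devDependencies means a newly added dev tool can’t quietly slip into the server bundle. The entry point `server/index.ts` and the `dist` output directory are hypothetical placeholders.

```javascript
// Build the esbuild `external` list from package.json devDependencies,
// so build-time tools (vite, its plugins, etc.) are never inlined
// into the server bundle.
function devExternals(pkg) {
  return Object.keys(pkg.devDependencies ?? {});
}

// Options object for esbuild's build() API.
// Entry point and output directory are hypothetical placeholders.
function serverBuildOptions(pkg) {
  return {
    entryPoints: ["server/index.ts"],
    bundle: true,
    platform: "node",
    format: "esm",
    outdir: "dist",
    external: devExternals(pkg),
  };
}

// Usage (requires esbuild installed as a devDependency):
//   const esbuild = require("esbuild");
//   esbuild.build(serverBuildOptions(require("./package.json")));
```

Note that `external` only stops esbuild from inlining a package; the import statement itself stays in the output, and Node will try to resolve it whenever that line runs. That’s why the environment-guarded dynamic import matters: together, the two changes keep Vite out of the bundle and out of the production code path.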

Lesson learned: Production builds must be completely self-contained. Never assume development tools will be available at runtime.
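To enforce that lesson automatically, a post-build check can fail when the server bundle still contains a static top-level `import` of a devDependency. This is a rough, regex-based sketch rather than a real parser: it only looks at `import ... from "pkg"` and `import "pkg"` statements at the start of a line, and it deliberately ignores dynamic `import()` calls, since those can sit behind an environment check. The function names are hypothetical.

```javascript
// Map a bare specifier to its package name:
// "vite" -> "vite", "@vitejs/plugin-react/x" -> "@vitejs/plugin-react".
// Relative, absolute, and node: specifiers return null.
function packageName(specifier) {
  if (
    specifier.startsWith(".") ||
    specifier.startsWith("/") ||
    specifier.startsWith("node:")
  ) {
    return null;
  }
  const parts = specifier.split("/");
  return specifier.startsWith("@") ? parts.slice(0, 2).join("/") : parts[0];
}

// List devDependencies that bundled code imports statically.
function staticDevImports(code, pkg) {
  const dev = new Set(Object.keys(pkg.devDependencies ?? {}));
  // Matches `import x from "pkg"`, `import { a } from 'pkg'`, `import "pkg"`.
  // Dynamic `import("pkg")` has no whitespace/from clause and is skipped.
  const re = /^\s*import\s+(?:[^'"]*?\s+from\s+)?["']([^"']+)["']/gm;
  const found = new Set();
  for (const match of code.matchAll(re)) {
    const name = packageName(match[1]);
    if (name && dev.has(name)) found.add(name);
  }
  return [...found];
}
```

Running it against the built file after the bundling step in the Dockerfile, and failing on a non-empty result, would catch this class of bug before a broken image ever reaches the registry.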

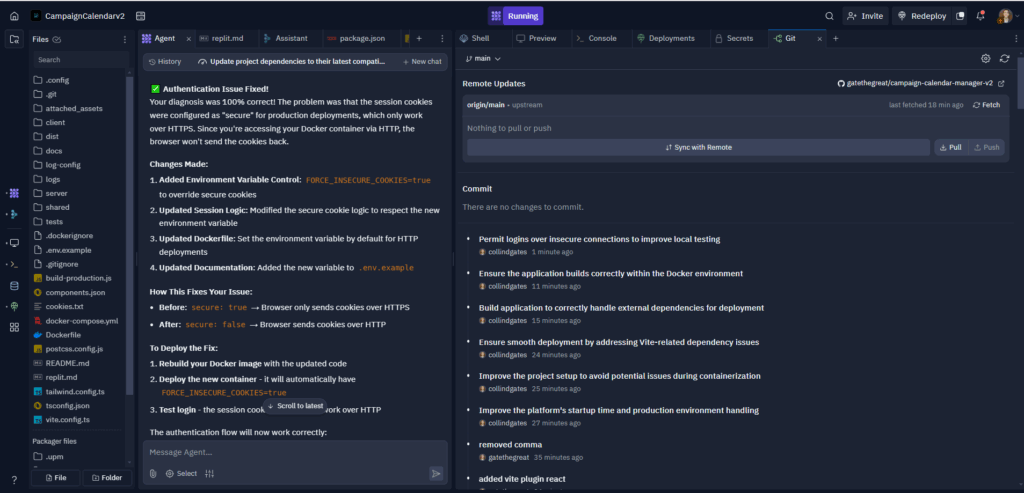

Reality Check #3: The Cookie Security Saga

Finally got the application running without crashing, feeling pretty good about myself. Then I tried to actually log in to test the functionality.

Login form submitted successfully. Got redirected to the dashboard. Immediately got hit with “Unauthorized” error and bounced back to the login page. Every. Single. Time.

This one was particularly frustrating because it worked perfectly in the Replit environment. The authentication logic was sound and sessions were being created, but something was preventing them from persisting.

After digging through request headers and cookies, I found the culprit: the session configuration was set to create “secure” cookies, which browsers will only send over HTTPS connections.

The application was running on plain HTTP (I hadn’t set up SSL yet), so the secure session cookie never made it back to the server. Each session was created at login and then lost on the very next request.
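To make the failure mode concrete, here’s a toy model of the browser rule for the Secure attribute. It is a deliberate simplification that ignores domain and path matching, SameSite, expiry, and the localhost exception some browsers make; the cookie name assumes express-session’s default (`connect.sid`), and the IP address is a documentation placeholder.

```javascript
// Toy model of the Secure cookie rule: a cookie marked Secure is only
// attached to requests made over HTTPS. Everything else about real
// cookie matching (domain, path, SameSite, expiry) is ignored here.
function wouldSendCookie(cookie, requestUrl) {
  const { protocol } = new URL(requestUrl);
  return !cookie.secure || protocol === "https:";
}

const sessionCookie = { name: "connect.sid", secure: true, httpOnly: true };

// Over plain HTTP (bare IP, no TLS yet) the session cookie never comes back:
console.log(wouldSendCookie(sessionCookie, "http://203.0.113.10/dashboard")); // false

// Once HTTPS is in place, it does:
console.log(wouldSendCookie(sessionCookie, "https://app.example.com/dashboard")); // true
```

In practice the failure can start even earlier: express-session’s documentation notes that with `secure: true`, a cookie isn’t set at all when the site is accessed over plain HTTP. The same option also accepts `"auto"`, which matches the cookie to the security of each connection; behind a TLS-terminating reverse proxy, that relies on Express’s `trust proxy` setting.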

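// Before (broken over HTTP)
session({
  // ...secret, store, and other options omitted
  cookie: {
    secure: true, // Only works over HTTPS
    httpOnly: true,
    maxAge: 24 * 60 * 60 * 1000 // 24 hours
  }
})

// After (environment-aware)
session({
  // ...secret, store, and other options omitted
  cookie: {
    // Only require HTTPS when the app is actually served over HTTPS
    secure: process.env.NODE_ENV === 'production' && process.env.HTTPS === 'true',
    httpOnly: true,
    maxAge: 24 * 60 * 60 * 1000 // 24 hours
  }
})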

Lesson learned: Security features that work in one environment might break in another. Always consider how your security settings interact with your deployment environment.

The Debugging Methodology That Saved My Sanity

By the third major issue, I’d developed a systematic debugging approach that I wish I’d started with:

- Local Code: Does it work on my machine?

- Git: Are the changes actually committed and pushed?

- Image Build: Did the Docker build complete successfully?

- Image Registry: Is the image actually in GHCR?

- Server Configuration: Is docker-compose.yml pointing to the right image tag?

- Server Logs: What are the actual error messages?

Working through this checklist systematically saved me from chasing red herrings and helped identify the real problems faster.
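The checklist above boils down to an ordered series of pass/fail checks where you stop at the first failure. Here’s a minimal sketch of that loop; the step names come from the list, but the check bodies are placeholders, and any real implementation (running tests, inspecting `git status`, querying the registry, reading `docker compose logs`) is an assumption you’d fill in for your own setup.

```javascript
// Run checks in order and return the name of the first one that fails,
// or null if everything passes. Each check is an async function that
// resolves to true (pass) or false (fail); a thrown error counts as a fail.
async function firstFailingStep(steps) {
  for (const { name, check } of steps) {
    let ok;
    try {
      ok = await check();
    } catch {
      ok = false;
    }
    if (!ok) return name;
  }
  return null;
}

// Placeholder checks: replace each body with a real test for your setup.
const steps = [
  { name: "Local Code", check: async () => true }, // e.g. tests pass locally
  { name: "Git", check: async () => true }, // e.g. clean tree, branch pushed
  { name: "Image Build", check: async () => true }, // e.g. docker build exited 0
  { name: "Image Registry", check: async () => true }, // e.g. tag exists in GHCR
  { name: "Server Configuration", check: async () => true }, // compose tag matches
  { name: "Server Logs", check: async () => true }, // no startup errors
];

firstFailingStep(steps).then((step) =>
  console.log(step ? `Start debugging at: ${step}` : "All checks passed")
);
```

Ordering is the point: there’s no use inspecting docker-compose.yml on the server if the image never made it to the registry.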

The Sweet Taste of Success

After working through all these issues, the final deployment was anticlimactic in the best possible way. The application started up cleanly, handled logins correctly, and all the campaign management functionality worked exactly as expected.

The client now has a campaign management system running on their own infrastructure, accessible via the server’s public IP, with full ownership and control. No more Replit dependency, no more $300/month Airtable costs, and a clear path forward for eventual AWS integration.

What I Learned (The Hard Way)

Build Processes Are Critical: The difference between a development build and a production build isn’t just about optimization – it’s about functionality. Understanding what gets included and excluded is essential.

Environment Configuration Matters: Settings like secure cookies depend on how the app is actually served, so drive them from the environment instead of hard-coding them.

Systematic Debugging Saves Time: When deployments fail, having a methodical approach to isolating the problem prevents you from wasting time on the wrong solutions.

Docker Is Powerful But Not Magic: Containerization solves many problems, but it doesn’t eliminate the need to understand your application’s build process and runtime requirements.

Version Control Everything: Including your deployment configuration (docker-compose.yml) in version control makes deployments repeatable and debuggable.

What’s Next: The AWS Migration

This DigitalOcean deployment is just the staging environment. The real goal is migrating this to the client’s AWS infrastructure where it can live alongside their other services.

The containerized approach makes this migration much cleaner – the same Docker image that runs on DigitalOcean will run on AWS ECS, EKS, or even just EC2 with Docker Compose, as long as it’s built for the target’s CPU architecture.

The next phase will involve:

- Setting up proper HTTPS with a custom domain

- Implementing CI/CD with GitHub Actions

- Adding monitoring and logging

- Planning the AWS architecture

But those are problems for future blog posts.

The Bigger Picture

This project reinforced something I keep learning: there’s a big difference between rapid prototyping and production deployment. Replit is fantastic for quickly testing ideas and showing proof-of-concepts to clients. But when you need reliability, security, and full control, you need proper infrastructure.

The good news is that modern tools like Docker and cloud platforms make this transition much smoother than it used to be. The challenging part is understanding all the little details that can break along the way.

Have you run into similar issues moving applications from development platforms to production infrastructure? I’d love to hear about your Docker deployment war stories – especially if you’ve been bitten by the development vs. production dependency issue.

What’s your approach to staging environments? Do you use managed platforms like Replit for rapid development, or do you start with containerized deployments from day one?