You know that moment when you read the Azure Container Apps documentation and think “this looks straightforward”? Yeah, that was me last week. FastMCP server working locally, Docker container running perfectly, Azure deployment guide looking clean and simple.

Then I tried to actually deploy it.

Port binding conflicts. Authentication failures that made no sense. Container logs showing “unhealthy” with zero context about why. And my personal favorite: spending two hours debugging why my MCP server was accessible but not responding, only to discover Azure was routing traffic to port 80 while my container was listening on 8000.

Here’s how I went from “just deploy the container” to actually understanding how Azure Container Apps works with Python MCP servers—including all the stuff that broke along the way.

The Goal: Get Claude Talking to an IT Ticket System

Simple concept: Build an MCP server that lets Claude create IT tickets directly instead of making users copy-paste everything into a form. Five tools, FastMCP for the protocol handling, containerized deployment so clients don’t need to run anything locally.

Stack:

- FastMCP (Python wrapper for the MCP protocol)

- Azure Container Apps (managed container orchestration)

- Azure Container Registry (image storage)

- n8n webhooks as the backend (because I’m an n8n specialist and it’s the fastest way to prototype)

Expected flow:

- User describes issue to Claude

- Claude calls MCP server tools to gather valid options

- Claude creates properly categorized ticket

- User gets ticket number instantly

What could go wrong?

Phase 1: FastMCP Server (The Part That Worked)

Building the MCP server itself was actually straightforward. FastMCP abstracts away all the protocol complexity, so you just write Python functions and decorate them with @mcp.tool().

Here’s a simplified version of one tool:

```python
from mcp.server.fastmcp import FastMCP
import httpx

mcp = FastMCP("IT Ticket Portal")


@mcp.tool()
async def get_valid_priorities() -> str:
    """
    Get the complete list of official priority levels for IT tickets.

    Use this tool to retrieve all available priority options before
    creating a ticket. Priority levels determine response time.

    Returns:
        JSON response containing priorities with names and descriptions.
    """
    async with httpx.AsyncClient(timeout=10.0) as client:
        response = await client.get(
            "https://dev.logicweave.ai/webhook/priorities",
            headers={"Authorization": "Bearer secret-token"},
        )
        response.raise_for_status()
        return response.text
```

Clean, simple, worked perfectly on my machine. Created five tools following the same pattern:

- get_valid_environments – List IT environments

- get_valid_topics – Valid ticket topics

- get_valid_categories – Technical categories

- get_valid_priorities – Priority levels

- create_ticket – Actually create the ticket

Tested locally using the MCP Inspector tool. Everything worked. Tools showed up, Claude could call them, responses came back as expected.

Time to containerize and deploy to Azure.

Phase 2: Containerization (Where Things Started Breaking)

First attempt at the Dockerfile:

```dockerfile
FROM python:3.12-slim

WORKDIR /app

COPY requirements.txt .
RUN pip install -r requirements.txt

COPY . .

CMD ["python", "server.py"]
```

Built the image. Ran it locally. Worked fine.

Pushed to Azure Container Registry:

```bash
az acr login --name myregistry

docker build -t myregistry.azurecr.io/mcp-server:latest .

docker push myregistry.azurecr.io/mcp-server:latest
```

No problems yet. The pain was about to start.

Problem #1: The Port Binding Nightmare

Deployed to Azure Container Apps using the Azure CLI:

```bash
az containerapp create \
  --name mcp-ticket-server \
  --resource-group python-mcp-rg \
  --environment mcp-env \
  --image myregistry.azurecr.io/mcp-server:latest \
  --target-port 8000 \
  --ingress external
```

Deployment succeeded. Container started. Checked the logs: “Server running on 0.0.0.0:8000”

Tried to access the MCP endpoint: Connection refused

Spent 30 minutes checking firewall rules, environment variables, container logs. Everything looked fine. Server was running. Port was exposed. But nothing was accessible.

Finally checked the Azure Container Apps configuration in the portal.

The ingress was routing to port 80.

My FastMCP server was listening on 8000. Azure was sending traffic to 80. No wonder nothing worked.
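In hindsight, a ten-second local check would have narrowed this down: “connection refused” means nothing is accepting TCP connections on that host and port. A minimal sketch using only the standard library; the ports checked in the usage block are just the two from this story.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """True if something accepts TCP connections at host:port; False on refusal or timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers ConnectionRefusedError and socket timeouts
        return False


if __name__ == "__main__":
    # e.g. after `docker run -p 8000:8000 mcp-server:latest` on your own machine
    for port in (80, 8000):
        print(port, is_listening("127.0.0.1", port))
```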

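The fix: Explicitly tell FastMCP which host and port to use AND make sure Azure knows about it:

```python
# In server.py: the official SDK's FastMCP takes host/port as settings on the
# constructor; run() itself only accepts the transport
mcp = FastMCP("IT Ticket Portal", host="0.0.0.0", port=8000)

if __name__ == "__main__":
    mcp.run(transport="sse")
```

```bash
# In the deployment command: --target-port must match the port the app listens on.
# Ingress transport stays on auto (the default): the Python server speaks HTTP/1.1,
# so forcing http2 between the ingress and the container would break requests.
az containerapp create \
  --name mcp-ticket-server \
  --resource-group python-mcp-rg \
  --environment mcp-env \
  --image myregistry.azurecr.io/mcp-server:latest \
  --target-port 8000 \
  --ingress external \
  --transport auto
```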

Lesson learned: Azure Container Apps defaults to port 80 for HTTP traffic. If your app listens on a different port, you MUST specify --target-port. The error messages don’t tell you this—you just get connection refused.

Problem #2: The Authentication Token Dance

With port issues resolved, I could access the health check endpoint. Great progress.

Tried to actually use the MCP tools from Claude: 401 Unauthorized

My server requires a bearer token for security. I’d set it as an environment variable in the container. Checked the Azure portal—environment variable was there. Checked the container logs—token was loading correctly.

But every request was coming back unauthorized.

Here’s what I was doing wrong:

```python
# My naive first attempt
import os

import httpx


@mcp.tool()
async def get_valid_priorities() -> str:
    async with httpx.AsyncClient() as client:
        response = await client.get(
            "https://dev.logicweave.ai/webhook/priorities",
            headers={"Authorization": f"Bearer {os.getenv('WEBHOOK_TOKEN')}"},
        )
        response.raise_for_status()
        return response.text
```

Looks fine, right? Token is in the environment, we’re passing it in the header.

The problem: that token only authenticates my server to the n8n webhooks. Authentication for the MCP server itself happens at the protocol endpoint, before any individual tool runs.

I was handling auth on the webhook side but forgetting that Claude needs to authenticate with the MCP server itself first. My server, running in Azure Container Apps, was receiving MCP requests without a bearer token and correctly rejecting them.

The fix: put an explicit bearer-token check in front of FastMCP’s HTTP endpoints, and configure the client to send that key:

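```python
# server.py
# Serve with: uvicorn server:app --host 0.0.0.0 --port 8000
import hmac
import os

from mcp.server.fastmcp import FastMCP
from starlette.responses import JSONResponse

mcp = FastMCP("IT Ticket Portal")

MCP_API_KEY = os.getenv("MCP_API_KEY")
if not MCP_API_KEY:
    raise ValueError("MCP_API_KEY environment variable required")


class BearerAuthMiddleware:
    """ASGI middleware: reject HTTP requests without 'Authorization: Bearer <MCP_API_KEY>'."""

    def __init__(self, app):
        self.app = app

    async def __call__(self, scope, receive, send):
        if scope["type"] == "http":
            auth_header = dict(scope["headers"]).get(b"authorization", b"").decode("latin-1")
            token = auth_header.removeprefix("Bearer ")
            # compare_digest avoids leaking the key through timing differences
            if not auth_header.startswith("Bearer ") or not hmac.compare_digest(
                token.encode(), MCP_API_KEY.encode()
            ):
                response = JSONResponse({"error": "Unauthorized"}, status_code=401)
                await response(scope, receive, send)
                return
        await self.app(scope, receive, send)


# Wrap FastMCP's SSE app (it serves /sse and /messages/) with the check
app = BearerAuthMiddleware(mcp.sse_app())
```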

Then in Azure Container Apps, set the environment variable securely:

```bash
az containerapp create \
  --name mcp-ticket-server \
  --resource-group python-mcp-rg \
  --environment mcp-env \
  --image myregistry.azurecr.io/mcp-server:latest \
  --target-port 8000 \
  --ingress external \
  --secrets mcp-api-key=<your-secure-key> \
  --env-vars MCP_API_KEY=secretref:mcp-api-key
```

Lesson learned: the MCP endpoint needs its own authentication, separate from the credentials your tools use to reach backend APIs. Store sensitive values as Azure Container Apps secrets and reference them with secretref, rather than putting them in plain environment variables.

Problem #3: Health Checks That Lie

Container kept restarting. Azure portal showed “Unhealthy”. Container logs showed the server starting up fine, listening on the right port, ready to accept connections.

But Azure kept killing it and restarting.

Turns out Azure Container Apps has health probe requirements, and my FastMCP server wasn’t meeting them by default.

The problem: Azure was hitting / for health checks. FastMCP doesn’t serve anything at /. With the SSE transport it serves /sse (plus /messages/ for client-to-server posts); with the Streamable HTTP transport it serves /mcp.

When the health check failed, Azure assumed the container was broken and restarted it. Except the container was fine—it just wasn’t serving what Azure expected at the health check endpoint.

The fix: Add a dedicated health endpoint:

```python
from mcp.server.fastmcp import FastMCP
from starlette.applications import Starlette
from starlette.responses import PlainTextResponse
from starlette.routing import Mount, Route
import uvicorn

mcp = FastMCP("IT Ticket Portal")


async def health_check(request):
    return PlainTextResponse("OK", status_code=200)


# Wrap FastMCP in a Starlette app so we can add custom routes.
# /health is matched first; everything else goes to FastMCP's SSE app (/sse, /messages/).
app = Starlette(
    routes=[
        Route("/health", endpoint=health_check),
        Mount("/", app=mcp.sse_app()),
    ]
)

if __name__ == "__main__":
    uvicorn.run(app, host="0.0.0.0", port=8000)
```

Then configure Azure to use the health endpoint:

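```bash
az containerapp create \
  --name mcp-ticket-server \
  --resource-group python-mcp-rg \
  --environment mcp-env \
  --image myregistry.azurecr.io/mcp-server:latest \
  --target-port 8000 \
  --ingress external \
  --secrets mcp-api-key=<your-secure-key> \
  --env-vars MCP_API_KEY=secretref:mcp-api-key

# After creation, add the health probe. Setting an env var does not configure probes:
# export the app definition, add the probe under
# properties.template.containers[].probes, then apply the edited file.
az containerapp show \
  --name mcp-ticket-server \
  --resource-group python-mcp-rg \
  --output yaml > app.yaml

az containerapp update \
  --name mcp-ticket-server \
  --resource-group python-mcp-rg \
  --yaml app.yaml
```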

The probe itself is defined in the container app’s YAML, under properties.template.containers[].probes:

```yaml
probes:
  - type: Liveness
    httpGet:
      path: /health
      port: 8000
    periodSeconds: 10
    failureThreshold: 3
```

Lesson learned: Azure Container Apps health probes are non-negotiable. If you don’t serve a 200 OK at the health check path, your container will restart infinitely. Add a dedicated /health endpoint to any production container.

What Finally Worked: The Production Setup

After fighting through those three major issues, here’s the final architecture that actually works:

Dockerfile:

```dockerfile
FROM python:3.12-slim

# Install uv for faster package installation
RUN pip install uv

WORKDIR /app

COPY requirements.txt .
RUN uv pip install --system -r requirements.txt

COPY . .

# Health check for Docker (Azure uses its own probes).
# raise_for_status() makes non-2xx responses fail the check, not just connection errors.
HEALTHCHECK --interval=30s --timeout=10s --start-period=5s --retries=3 \
  CMD python -c "import httpx; httpx.get('http://localhost:8000/health').raise_for_status()"

EXPOSE 8000

CMD ["python", "server.py"]
```

Server with all fixes:

```python
import hmac
import os

import uvicorn
from mcp.server.fastmcp import FastMCP
from starlette.applications import Starlette
from starlette.responses import JSONResponse, PlainTextResponse
from starlette.routing import Mount, Route

mcp = FastMCP("IT Ticket Portal")

MCP_API_KEY = os.getenv("MCP_API_KEY")
if not MCP_API_KEY:
    raise ValueError("MCP_API_KEY required")

# All your @mcp.tool() decorated functions here
# ... (5 tools as shown earlier)


# Health check endpoint (left unauthenticated so Azure's probe can reach it)
async def health(request):
    return PlainTextResponse("OK")


class BearerAuthMiddleware:
    """ASGI middleware: require 'Authorization: Bearer <MCP_API_KEY>' everywhere except /health."""

    def __init__(self, app):
        self.app = app

    async def __call__(self, scope, receive, send):
        if scope["type"] == "http" and scope["path"] != "/health":
            auth_header = dict(scope["headers"]).get(b"authorization", b"").decode("latin-1")
            token = auth_header.removeprefix("Bearer ")
            if not auth_header.startswith("Bearer ") or not hmac.compare_digest(
                token.encode(), MCP_API_KEY.encode()
            ):
                response = JSONResponse({"error": "Unauthorized"}, status_code=401)
                await response(scope, receive, send)
                return
        await self.app(scope, receive, send)


# Create Starlette app: /health first, then FastMCP's SSE app (/sse and /messages/)
routes = [
    Route("/health", endpoint=health),
    Mount("/", app=mcp.sse_app()),
]

app = BearerAuthMiddleware(Starlette(routes=routes))

if __name__ == "__main__":
    uvicorn.run(app, host="0.0.0.0", port=8000)
```

Complete deployment command:

```bash
# Create resource group
az group create --name python-mcp-rg --location eastus

# Create Container Apps environment
az containerapp env create \
  --name mcp-environment \
  --resource-group python-mcp-rg \
  --location eastus

# Create container registry
az acr create \
  --resource-group python-mcp-rg \
  --name mcpregistry123 \
  --sku Basic

# Build and push image
az acr build \
  --registry mcpregistry123 \
  --image mcp-server:latest \
  --file Dockerfile .

# Deploy container app with all the fixes
# (ingress transport left on auto: uvicorn serves HTTP/1.1, not HTTP/2)
az containerapp create \
  --name mcp-ticket-server \
  --resource-group python-mcp-rg \
  --environment mcp-environment \
  --image mcpregistry123.azurecr.io/mcp-server:latest \
  --target-port 8000 \
  --ingress external \
  --transport auto \
  --min-replicas 1 \
  --max-replicas 3 \
  --secrets mcp-api-key=<your-secure-256-bit-key> \
  --env-vars MCP_API_KEY=secretref:mcp-api-key \
  --registry-server mcpregistry123.azurecr.io \
  --registry-identity system
```

Results:

- Container starts successfully ✓

- Health checks pass ✓

- Authentication works ✓

- Claude can discover and call all 5 tools ✓

- Ticket creation takes 10 seconds instead of 5 minutes ✓

What I Learned (The Hard Way)

1. Azure Container Apps != Docker on a VM

I came into this thinking “it’s just a container, works locally, should work in Azure.” Wrong. Azure Container Apps has opinions about ports, health checks, and how containers should behave. Those opinions aren’t well-documented in the “getting started” guides.

2. Port Configuration Matters Everywhere

Your application listens on a port. Your Dockerfile exposes a port. Your Azure deployment targets a port. Your ingress routes to a port. All four need to match. Miss one and you get cryptic connection errors.

3. Health Checks Are Non-Optional

In development, you don’t need health checks. In production on Azure, they’re the difference between a stable service and an infinite restart loop. Add /health endpoints to everything.

4. Authentication Layering Is Tricky

MCP protocol authentication is separate from your backend API authentication. You need both. And Azure Container Apps secrets are the right way to manage credentials—not environment variables in docker-compose files.

5. FastMCP Defaults Assume STDIO Transport

Most MCP documentation shows local STDIO connections for use with Claude Desktop. Azure requires HTTP/SSE transport. You need to configure this explicitly, and bind to 0.0.0.0 rather than localhost, or your server won’t accept remote connections.

6. Container Logs Are Your Only Friend

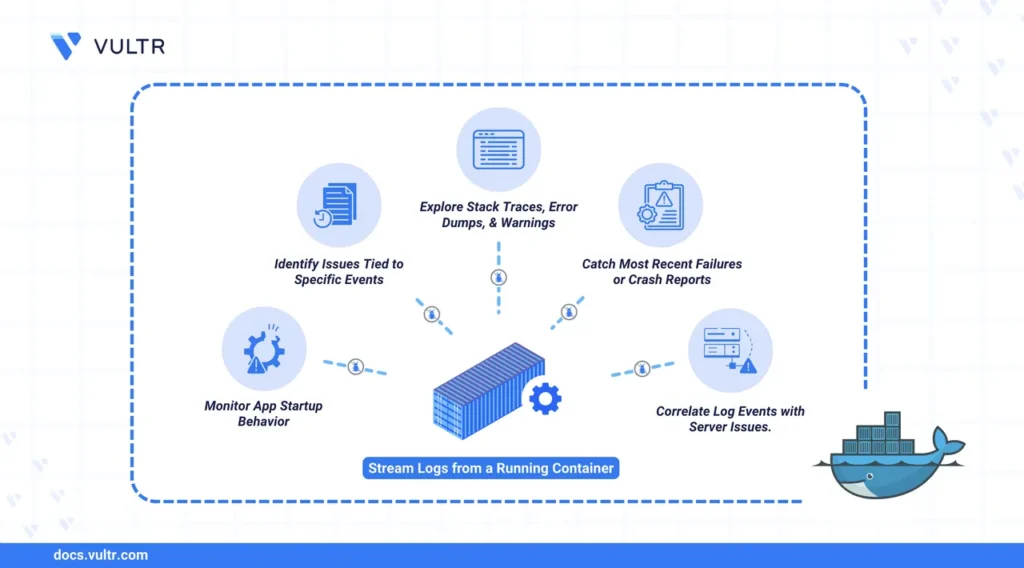

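When things break in Azure, the portal tells you “unhealthy” and little else. There’s no SSH, and while az containerapp exec can open a shell in a running replica, that doesn’t help much when the container is stuck in a restart loop. You live and die by container logs. Use az containerapp logs show religiously.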

Azure Container Apps vs VM + Docker

I’ve deployed both ways now. Here’s my honest take:

Azure Container Apps wins when:

- You want zero infrastructure management

- You need automatic scaling (0 to N instances)

- You’re building for production with proper HTTPS/auth

- You want built-in monitoring and logging

- You need it deployed yesterday

VM + Docker wins when:

- You need full control over networking (custom VPN, specific ports)

- You’re running multiple containers with complex orchestration

- You want to SSH in and debug directly

- Cost optimization is critical (VMs can be cheaper for high-traffic apps)

- You need to run non-container workloads too

For this MCP server, Azure Container Apps was the right choice. I don’t want to manage VMs, set up Traefik, handle SSL certificates, or configure systemd services. I want to push code and have it running securely in minutes.

Once I understood the port/health/auth triad, Azure Container Apps became exactly what I needed.

The Real Cost Breakdown

Development time:

- Building MCP server locally: 4 hours

- Fighting Azure deployment issues: 6 hours (would’ve been 2 with this blog)

- Writing health checks and proper auth: 1 hour

- Total: 11 hours

Azure costs (estimate):

- Container Apps environment: $0/month (consumption-based)

- Container Apps instance: ~$5/month (with 1-3 replicas, minimal traffic)

- Container Registry: $5/month (Basic tier)

- Total: ~$10/month

Compare that to running a VM:

- B1s VM: $10/month

- Managed disk: $5/month

- Public IP: $3/month

- Total: ~$18/month

Plus you’re managing OS updates, Docker, networking, SSL, and everything else yourself.

The Container Apps pricing is better AND you don’t manage infrastructure. Clear winner for small-scale MCP servers.
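The arithmetic behind that comparison, using the rough monthly estimates from the two lists above (real prices vary by region, usage, and replica count):

```python
# Monthly estimates in USD, taken from the lists above
container_apps = {"environment": 0, "app (1-3 replicas)": 5, "registry (Basic)": 5}
vm = {"B1s VM": 10, "managed disk": 5, "public IP": 3}

container_apps_total = sum(container_apps.values())
vm_total = sum(vm.values())
yearly_difference = 12 * (vm_total - container_apps_total)

print(f"Container Apps ~${container_apps_total}/mo, VM ~${vm_total}/mo, "
      f"~${yearly_difference}/yr difference")
```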

What’s Next

Now that I understand how Azure Container Apps works with FastMCP, I’m planning to migrate a few other MCP servers:

- Supabase MCP Server – Direct database access for Claude

- GitHub Integration MCP – Repository management tools

- Analytics MCP – Business metrics and reporting

Each one will use the same pattern:

- Build with FastMCP

- Add a /health endpoint

- Configure proper port binding

- Use Azure secrets for credentials

- Deploy with az containerapp create

Takes about 30 minutes per server now that I know the gotchas.

The Bottom Line

Deploying a Python MCP server to Azure Container Apps is straightforward once you know the three critical requirements:

- Port consistency – Application port, EXPOSE, --target-port, and ingress must all match

- Health endpoint – Azure will kill containers without a working health check

- Protocol auth – Secure the MCP endpoint itself, not just individual tools

Miss any of those three and you’ll spend hours debugging cryptic errors.

But get them right and you have a production-ready, auto-scaling, managed MCP server running in minutes. No VMs, no Traefik config, no SSL certificate management. Just push code and it runs.

Building AI integrations in 2025 means choosing the right infrastructure. Sometimes managed services with opinions are better than infinite flexibility with infinite maintenance.

Have you deployed MCP servers to Azure or other cloud platforms? I’d love to hear about your experiences—especially if you hit different issues than the port/health/auth triad I ran into. What gotchas did you discover?

Drop a comment or reach out. Always down to compare notes on making AI infrastructure actually work in production.

As an n8n automation specialist pursuing my AWS Solutions Architect certification, I’m constantly evaluating different cloud platforms for client work. Azure Container Apps proved to be solid for managed container deployments—once you understand how it actually works vs how the getting-started docs make it sound.