So Microsoft and Google both had their big keynotes recently, and honestly, they got me pretty excited about the current state of agent development. Google was pushing its Agent Development Kit (ADK) with MCP (Model Context Protocol) support, while Microsoft was all-in on AI Foundry. Different approaches, but both looked like potential game-changers.

Here’s the thing, though: I’m AWS-native. That’s my comfort zone and my go-to for client work. But I figured being able to build agents on all three major cloud platforms would be a solid skill set. Flexibility is everything in this business.

Perfect timing, too: I was working with an enterprise client who was just starting to explore their software options for AI agents and tools. I’d been showing them n8n and how it could fit into their workflow, and we started talking about post-deployment monitoring – you know, the unglamorous but critical stuff that separates proofs of concept from production-ready systems.

Since they were already deep in the Azure ecosystem, I figured it was time to see what Microsoft’s AI Foundry was really about.

The Initial Promise (And Quick Win)

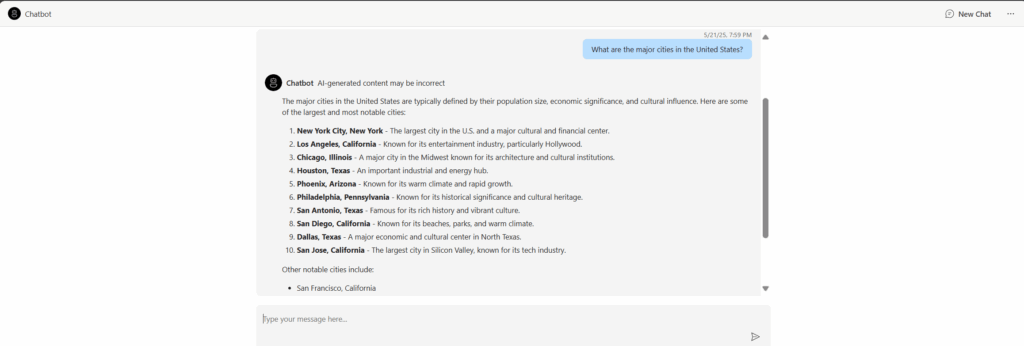

I’ll give Microsoft credit – they made it easy to get started. They had this template that deployed all the necessary resources and code to chat with an agent right out of the gate. The traces were automatically sent back to AI Foundry, and I could add tools to the agent and start chatting immediately.

For about 10 minutes, I thought, “Damn, this is actually pretty slick.”

Then reality hit.

The First Red Flag: Missing Tool Traces

Here’s where things got interesting (and by interesting, I mean frustrating). The platform wasn’t logging my custom tool usage. At all.

I spent way too much time searching for answers: documentation, forums, GitHub issues, you name it. Nothing. The most plausible explanation I could come up with was a bug worth reporting. But that’s the funny thing about enterprise software: when nobody else seems to be complaining about an obvious issue, you start wondering whether you’re the problem.

Building My Own Frontend (Because Why Not?)

Rather than wait around for Microsoft to fix whatever was broken, I decided to build my own frontend to interact with the Azure AI Foundry agents. Built out the full session management – creating sessions, deleting sessions, the whole nine yards. Added a clean UI for chatting with the agent.

And here’s where I hit the real wall: streaming messages and tool tracing don’t play nice together.

I tried everything. Scoured the documentation. Looked for examples. Nothing. As far as I can tell, right now you can have real-time streaming responses OR proper tool tracing, but not both. Or if you can, there are no examples or documentation showing how to make the two work together.

The Good Stuff (Yes, There Was Some)

Don’t get me wrong – I wasn’t completely wasting my time. I got hands-on experience with some genuinely useful features:

- Evaluations and Red Teaming: Actually pretty solid for testing agent reliability

- Continuous Evaluations and Metrics: Good monitoring capabilities once you get everything set up

- Tracing: When it works, it’s comprehensive

- Content Filters: Decent controllability for enterprise compliance

- RAG Knowledge Base Setup: Straightforward implementation

I also got to spin up VMs and deploy containerized applications in Azure, which was new territory for me. Deployed a Foundry project and model in one container, chat app resources in another. Good learning experience from an infrastructure standpoint.

The Deal Breaker: Limited Model Selection

Here’s what really killed it for me: the lack of model variety.

Look, I get it – Microsoft has partnerships and business relationships to maintain. But when you’re building real solutions for real clients, you need options. Different use cases call for different models, and being locked into a limited selection just doesn’t cut it anymore.

Sure, there are ways to integrate some of these tools with model-agnostic frameworks like LangChain, but at that point, why am I limiting myself to Azure’s ecosystem?

The Bottom Line

Azure AI Foundry has some solid pieces. The monitoring capabilities are legit, the deployment process is smooth, and the enterprise features are there. But the platform feels half-baked in critical areas.

The streaming/tracing issue alone would be a non-starter for most production applications. Add in the limited model selection, and you’re looking at a platform that’s not quite ready for the flexibility that modern AI development demands.

My takeaway? Microsoft is moving in the right direction, but they’re not there yet. For now, I’ll stick with more flexible solutions that don’t force me to choose between real-time responses and proper observability.

Will I revisit Azure AI Foundry in six months? Maybe. But they’ve got some fundamental issues to sort out first. And yeah, I’m still curious about Google’s Agent Development Kit – that might be my next exploration.

Building AI solutions that actually work in production means making hard choices about platforms and tools. Sometimes the shiny new option isn’t the right option – at least not yet.